Biological Language Model

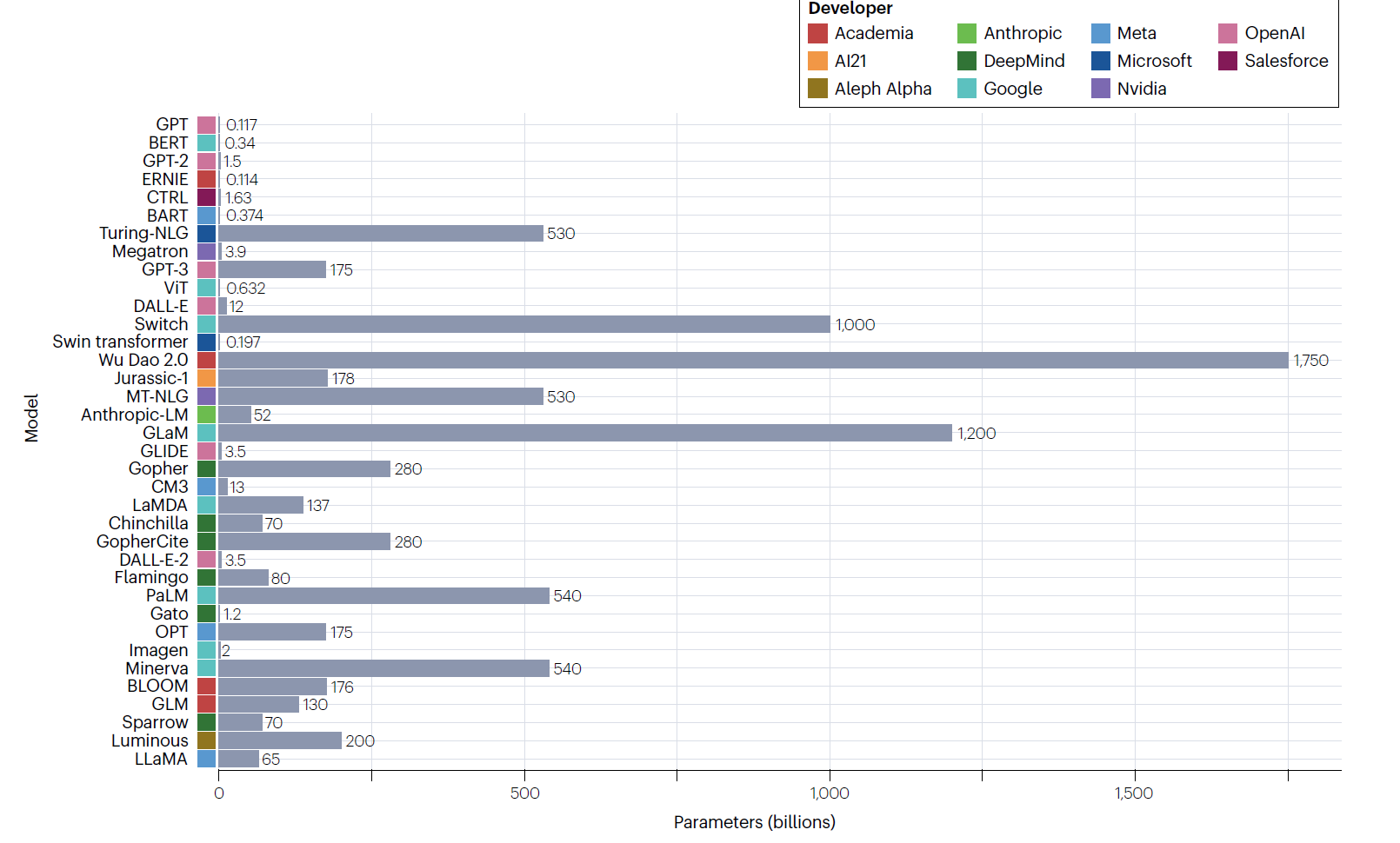

Large language models like GPT-4 have taken the world by storm with their remarkable command of natural language. However, the most important long-term opportunity for large language models (LLMs) will involve an entirely different kind of language: the language of biology.

A striking theme has emerged from the long march of research advances in biochemistry, molecular biology, and genetics over the past century: biology, it turns out, is a decipherable, programmable, and in some respects even digital system.

DNA uses only four variables, A (adenine), C (cytosine), G (guanine), and T (thymine), to encode the complete genetic instructions for every living organism on Earth. Compare this to modern computing systems, which encode all of the world's digital electronic information using two variables (0 and 1). One system is binary and the other is quaternary, but the two have a surprising conceptual overlap; both can rightly be considered digital.
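To make the binary/quaternary comparison concrete, here is a minimal Python sketch showing that each of the four bases carries exactly two bits of information, so a DNA sequence can be converted to a bit string and back without loss. The particular base-to-bit assignment and the function names `dna_to_bits` and `bits_to_dna` are illustrative assumptions, not a standard encoding.

```python
# Hypothetical mapping: any fixed one-to-one assignment of the four bases
# to 2-bit codes would work equally well.
BASE_TO_BITS = {"A": "00", "C": "01", "G": "10", "T": "11"}
BITS_TO_BASE = {bits: base for base, bits in BASE_TO_BITS.items()}


def dna_to_bits(sequence: str) -> str:
    """Encode a DNA string (quaternary alphabet) as a binary string."""
    return "".join(BASE_TO_BITS[base] for base in sequence.upper())


def bits_to_dna(bits: str) -> str:
    """Decode a binary string back into DNA, two bits per base."""
    if len(bits) % 2 != 0:
        raise ValueError("bit string length must be a multiple of 2")
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


encoded = dna_to_bits("GATTACA")
decoded = bits_to_dna(encoded)
print(encoded)  # 14 bits for 7 bases
print(decoded)  # round-trips to the original sequence
```

The round trip is lossless because a quaternary digit and a pair of binary digits both distinguish exactly four states.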